Common backtesting problems and how to avoid them

On 27 October 2024 - tagged evaluation

Backtesting is arguably the most important part of quantitative research. Its precision is critical for decision-making in the research process and for fund allocation. If your backtest is not precise enough, you might invest time or capital in a strategy that simply doesn't work, or abandon a strategy with great potential. For some kinds of strategies, backtests can be very precise and their results trustworthy, but other backtests should not be trusted at all. How do you tell the two apart? Here are the most common backtesting issues and how to avoid them:

Insufficient data

For a trustworthy backtest, you need a good amount of data. It shouldn't be measured just in years of data, but mostly by the number of trades. For an HFT order book strategy with a 55% success rate, you might get away with just 2 months of data on which the strategy makes 10 000 trades. For a long-term trend strategy on crypto, I wouldn't settle for less than e.g. 5 years of data and 200 trades. It is also a good idea for the data to include both bull and bear markets, or both trending and mean-reverting periods, so that the sample is 'balanced'.
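To see why the trade count matters more than the calendar length, here is a minimal sketch that treats each trade as an independent win or loss and uses the normal approximation to the binomial distribution. Both are simplifying assumptions: real trades are often autocorrelated, and trend strategies earn more from payoff asymmetry than from win rate. The helper names are illustrative, not from any library.

```python
import math
from typing import Tuple


def win_rate_z_score(win_rate: float, n_trades: int, null_rate: float = 0.5) -> float:
    """z-score of an observed win rate against a coin-flip null (normal approximation)."""
    std_err = math.sqrt(null_rate * (1.0 - null_rate) / n_trades)
    return (win_rate - null_rate) / std_err


def win_rate_ci(win_rate: float, n_trades: int, z: float = 1.96) -> Tuple[float, float]:
    """Approximate 95% confidence interval for the true win rate."""
    half_width = z * math.sqrt(win_rate * (1.0 - win_rate) / n_trades)
    return win_rate - half_width, win_rate + half_width


for n in (200, 10_000):
    lo, hi = win_rate_ci(0.55, n)
    print(f"{n:>6} trades: z = {win_rate_z_score(0.55, n):5.2f}, 95% CI = [{lo:.3f}, {hi:.3f}]")
```

Under these assumptions, a 55% win rate over 200 trades has a 95% interval of roughly 48% to 62%, so it cannot be told apart from a coin flip, while over 10 000 trades the interval narrows to about 54% to 56%.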

Having several assets (tokens/stocks) helps too, but as the assets are usually heavily correlated, it's much more valuable to have 5 years of 1 asset than 1 year of 5 assets.
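A rough way to quantify this: if N assets all have the same pairwise return correlation ρ, the variance of their averaged result equals that of only N / (1 + (N - 1)ρ) independent assets. The equal-correlation assumption and the 0.8 value below are illustrative, not measured; treat this as a heuristic, not a precise rule.

```python
def effective_num_assets(n_assets: int, avg_corr: float) -> float:
    """Effective number of independent assets, assuming equal pairwise correlation."""
    # Variance of an equal-weighted average of n assets with pairwise correlation rho
    # is sigma^2 * (1 + (n - 1) * rho) / n, which equals sigma^2 / n_eff for this n_eff.
    return n_assets / (1.0 + (n_assets - 1) * avg_corr)


# Hypothetical: 5 altcoins with an average pairwise return correlation of 0.8.
n_eff = effective_num_assets(5, 0.8)
print(f"1 year of 5 assets ~ {n_eff:.2f} years of a single independent asset")
```

By this heuristic, with ρ = 0.8 one year of five assets carries about as much independent information as 1.19 years of a single asset, far less than five years would.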

Survivorship bias

If you choose only assets that are available for trading now, you miss all the assets that went to zero in the past. Long-biased strategies, e.g. long trend following, will look much better on assets that are popular today. Once you also include assets that were popular in the past but went bankrupt or were delisted, your strategy might turn out not to be profitable at all.

Pro-tip: if you backtest a long-biased or long-only strategy on just a few assets, pick assets that were popular near the start of the backtesting period, not the ones popular today.
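Selecting such a point-in-time universe can be sketched as follows: keep every asset that was tradable on the start date, including ones delisted later, and rank them by the market cap observed on that date rather than today. The `Listing` type, the symbols and the market caps are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from typing import Dict, List, Optional


@dataclass
class Listing:
    symbol: str
    listed: date
    delisted: Optional[date]  # None if the asset still trades today


def point_in_time_universe(
    listings: List[Listing], market_caps_at_start: Dict[str, float], start: date, top_n: int
) -> List[str]:
    """Top-N assets by market cap as of `start`, including ones that later died."""
    tradable = [
        item for item in listings
        if item.listed <= start and (item.delisted is None or item.delisted > start)
    ]
    # Rank by market cap observed AT the start date, not by today's ranking.
    tradable.sort(key=lambda item: market_caps_at_start.get(item.symbol, 0.0), reverse=True)
    return [item.symbol for item in tradable[:top_n]]


# Hypothetical data: "DEAD" was a top asset at the start but was delisted later;
# "NEW" is popular today but did not exist at the start.
listings = [
    Listing("AAA", date(2012, 1, 1), None),
    Listing("DEAD", date(2017, 1, 1), date(2022, 5, 1)),
    Listing("NEW", date(2021, 3, 1), None),
    Listing("BBB", date(2015, 8, 1), None),
]
caps_at_start = {"AAA": 70e9, "BBB": 15e9, "DEAD": 5e9}
print(point_in_time_universe(listings, caps_at_start, date(2019, 1, 1), top_n=3))
```

The delisted asset stays in the universe for the backtest period, which is exactly what a universe built from today's listings would miss.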

Look-ahead bias

Look-ahead bias is a very common issue in backtesting. It happens when a trading decision uses data that would not yet have been available at that moment. For instance, you might use a candle's close price in your decision process and then trade at its open price. This mistake is common because candles are usually timestamped with their open time, so it is easy to record the trade as happening at the candle open. More subtle cases of look-ahead bias can happen when you merge data sources with different time zones or when you misuse the pandas `shift()` method (for example, a negative shift pulls future values into the current row).
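A minimal pandas sketch of the candle case described above, on made-up prices: the biased version trades a candle using a signal computed from that same candle's close, while the corrected version uses `shift(1)` so each signal can only act on the following candle. The toy signal and column names are hypothetical, and entering at the next candle's open is treated as equivalent to the previous close here.

```python
import pandas as pd

# Made-up 1-minute candles, indexed by candle OPEN time (a common convention).
candles = pd.DataFrame(
    {"open": [100.0, 101.0, 103.0, 102.0, 104.0],
     "close": [101.0, 103.0, 102.0, 104.0, 105.0]},
    index=pd.date_range("2024-01-01 00:00", periods=5, freq="1min"),
)

# Toy signal: long if the candle closed above its open. It is only known at the CLOSE.
signal = (candles["close"] > candles["open"]).astype(int)
candle_return = candles["close"] / candles["open"] - 1

# BIASED: the signal from candle t is applied to candle t's own open-to-close return,
# i.e. we "trade at the open" using information from the close.
biased_pnl = (signal * candle_return).sum()

# CORRECT: shift(1) moves each signal one row later, so it only trades the next candle.
# (shift(-1) would do the opposite and pull future data into the current row.)
position = signal.shift(1).fillna(0)
honest_pnl = (position * candle_return).sum()

print(f"biased: {biased_pnl:.4f}, honest: {honest_pnl:.4f}")
```

The biased version never holds a losing candle, which is a typical symptom of look-ahead: results that look too clean.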

The general way to prevent look-ahead bias is to use an event-based backtesting framework, which prevents the strategy logic from accessing future data. The disadvantage of event-based backtesting is that it is much slower, which can be a problem especially for parameter optimization, walk-forward testing or complex robustness tests.
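The core idea can be sketched in plain Python: the loop feeds bars one at a time, the strategy callback only ever receives the bars seen so far, and any decision is filled at the next bar's open. This is a toy illustration with hypothetical names (`Bar`, `run_event_based`), long/flat only and without costs, not a real framework.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass(frozen=True)
class Bar:
    open: float
    close: float


# A strategy receives only the bars seen so far and returns a target position: 0 (flat) or 1 (long).
Strategy = Callable[[List[Bar]], int]


def run_event_based(bars: List[Bar], strategy: Strategy) -> float:
    """Replay bars one by one; a decision made at bar t's close is filled at bar t+1's open."""
    position = 0
    pending: Optional[int] = None  # target decided at the previous close, not yet executed
    entry_price = 0.0
    pnl = 0.0
    history: List[Bar] = []
    for bar in bars:
        # 1) Execute the previous decision at the first price available after it: this open.
        if pending is not None and pending != position:
            if pending == 1:
                entry_price = bar.open
            else:
                pnl += bar.open - entry_price
            position = pending
        # 2) Only now is this bar's close known; the strategy gets a copy of past bars only.
        history.append(bar)
        pending = strategy(list(history))
    # Mark any open position to the last close.
    if position == 1:
        pnl += bars[-1].close - entry_price
    return pnl


def up_candle(history: List[Bar]) -> int:
    """Toy strategy: be long after a candle that closed above its open."""
    return 1 if history[-1].close > history[-1].open else 0


bars = [Bar(100.0, 101.0), Bar(101.0, 103.0), Bar(103.0, 102.0), Bar(102.0, 104.0), Bar(104.0, 105.0)]
print(run_event_based(bars, up_candle))
```

The per-bar Python loop is also what makes this style slower than vectorized code.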

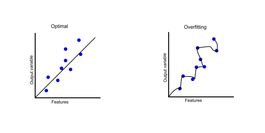

Overfitting

Overfitting happens when your strategy or its parameters are fitted too closely to the training data or to the recent historical behavior of your assets. It can happen during automated parameter optimization (especially in machine learning) or manually, through the quant's own choices. Overfitted strategies show very good performance on historical data but perform poorly in live trading.
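One common guard is to judge a strategy only on data its parameters were not fitted to. A minimal sketch of rolling walk-forward splits follows; the window sizes are arbitrary, `walk_forward_splits` is a hypothetical helper, and the optimization step itself is left out.

```python
from typing import Iterator, Tuple


def walk_forward_splits(n_obs: int, train_size: int, test_size: int) -> Iterator[Tuple[range, range]]:
    """Yield (train, test) index ranges; each test window starts right after its train window."""
    start = 0
    while start + train_size + test_size <= n_obs:
        train = range(start, start + train_size)
        test = range(start + train_size, start + train_size + test_size)
        yield train, test
        start += test_size  # roll forward so consecutive test windows don't overlap


for train, test in walk_forward_splits(n_obs=10, train_size=4, test_size=2):
    print(f"fit on {train.start}..{train.stop - 1}, evaluate on {test.start}..{test.stop - 1}")
```

Parameters are optimized on each train window and only the test-window results are kept; stitched together, they form an out-of-sample equity curve. A large gap between in-sample and out-of-sample performance is a typical sign of overfitting.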

Overfitting issues are so common and so hard to tackle that there is a whole overfitting series on this blog, starting with this article. We recommend it to every quant, not just the ones using machine learning or other optimization techniques.

Slippage, spread, fees and execution costs

Trading incurs several types of costs. The most basic one is the trading fee charged by the exchange, but that's not the only cost.

When using market orders, you also pay half of the bid-ask spread (relative to the mid price) on every trade. This cost is not visible in all kinds of data: in candlestick data, for instance, the close price can come from either a buy or a sell trade, depending on the direction of the last trade in the candle. For assets with wide spreads, the spread can even exceed 1%. So if you're trading altcoins, for example, you should get data that contains the bid-ask spread and account for it in your backtest. For high-cap assets, the spread is usually much smaller, so you can ignore it or account for a small fixed spread.
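A minimal round-trip cost model, assuming market orders fill at the best bid/ask (half the spread away from the mid price) and a proportional fee on each side. The 10 bps fee and 20 bps spread below are placeholder values, not any exchange's actual pricing, and slippage beyond the top of the book is ignored.

```python
def round_trip_return(entry_mid: float, exit_mid: float, spread_bps: float, fee_bps: float) -> float:
    """Net return of a long round trip with market orders, after half-spread and fees on both sides."""
    half_spread = spread_bps / 2 / 1e4
    fee = fee_bps / 1e4
    buy_price = entry_mid * (1 + half_spread)  # buy at the ask
    sell_price = exit_mid * (1 - half_spread)  # sell at the bid
    # Fees are charged on notional: pay more when buying, receive less when selling.
    return (sell_price * (1 - fee)) / (buy_price * (1 + fee)) - 1


gross = 101.0 / 100.0 - 1
net = round_trip_return(100.0, 101.0, spread_bps=20, fee_bps=10)
print(f"gross {gross:.4%}, net {net:.4%}")
```

With these placeholder costs, a round trip costs roughly the full spread plus two fees (about 40 bps), so a 1% gross move keeps only about 0.6%.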

When your order size is significant relative to the quantity available at the best bid/ask price, your order might sweep through several levels of the order book and execute at a worse price than the best bid/ask. This slippage is often material and should be modeled in the backtest. In live trading, you can reduce it by splitting the order into several parts executed over time, but slippage should still be simulated in the backtest, because executing parts of the trade later might cost you some of the backtested profitability.
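Given an order book snapshot, the slippage of a market buy can be estimated by walking the ask levels until the order is filled. A minimal sketch with a made-up three-level book; `sweep_buy` is a hypothetical helper, and real books are deeper and change between snapshots.

```python
from typing import List, Tuple


def sweep_buy(asks: List[Tuple[float, float]], qty: float) -> Tuple[float, float]:
    """Walk ask levels (best first) for a market buy of `qty`.

    Returns (average fill price, slippage vs. the best ask in bps).
    """
    remaining = qty
    cost = 0.0
    for price, size in asks:
        take = min(remaining, size)
        cost += take * price
        remaining -= take
        if remaining <= 1e-12:  # tolerance for floating-point leftovers
            avg_price = cost / qty
            best_ask = asks[0][0]
            return avg_price, (avg_price / best_ask - 1) * 1e4
    raise ValueError("order is larger than the visible book depth")


# Made-up ask side: (price, size) pairs, best price first.
asks = [(100.0, 2.0), (100.1, 3.0), (100.3, 5.0)]
avg_price, slippage_bps = sweep_buy(asks, qty=6.0)
print(f"avg fill {avg_price:.3f}, slippage {slippage_bps:.1f} bps")
```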

Sometimes people assume that they will execute their trades using maker (resting) limit orders and therefore earn the spread instead of paying it, while also saving on fees. This is, however, not so easy. Your orders tend to stay unfilled when the price moves away from them, which is exactly when the trade would have been profitable, and to get filled when the price moves through them, against you. This 'adverse selection' effect is stronger than one would think and can be very costly on smaller-cap assets with wide spreads.
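One way to avoid overly optimistic limit-order fills in a backtest is a conservative fill rule: a resting buy limit counts as filled only when a trade prints strictly below its price, since a mere touch may only have filled the orders ahead of yours in the queue. This is a common simplification, not an exact queue model; `limit_buy_fill_index` is a hypothetical helper and the price paths are made up.

```python
from typing import Optional, Sequence


def limit_buy_fill_index(trade_prices: Sequence[float], limit_price: float,
                         require_trade_through: bool = True) -> Optional[int]:
    """Index of the first trade that fills a resting buy limit, or None if it never fills."""
    for i, price in enumerate(trade_prices):
        if price < limit_price or (not require_trade_through and price == limit_price):
            return i
    return None


# Made-up trade paths for a buy limit at 100.0.
rally = [100.2, 100.0, 100.1, 100.4, 100.8]   # touches the limit, then moves up
selloff = [100.2, 100.0, 99.9, 99.5, 99.0]    # trades through the limit and keeps falling

for name, path in (("rally", rally), ("selloff", selloff)):
    touch = limit_buy_fill_index(path, 100.0, require_trade_through=False)
    through = limit_buy_fill_index(path, 100.0)
    print(f"{name}: touch rule fills at {touch}, trade-through rule fills at {through}")
```

Under the conservative rule the profitable rally is missed while the selloff is still filled, which is adverse selection in miniature.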

Latency

Many trading signals play out very quickly, which means any delay in executing the signal can be very costly. For example, a breakout signal can be very profitable at the exact moment of the breakout, but after just a few seconds the price might have moved so far that executing the signal is no longer profitable. This is why it's helpful to simulate your execution delay in the backtest.

With 1-minute candles, you can implement this by filling at the next candle's close instead of the current candle's price. For shorter-term strategies, you can use order book data from a few milliseconds after the signal is generated.
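With timestamped top-of-book quotes, a fixed execution delay can be simulated by looking up the quote in effect when the order would reach the exchange, i.e. the last update at or before the signal time plus the assumed latency. The sketch uses Python's `bisect` module on sorted millisecond timestamps; `ask_at_arrival`, the quotes and the latency values are hypothetical.

```python
from bisect import bisect_right
from typing import Sequence


def ask_at_arrival(quote_times_ms: Sequence[int], best_asks: Sequence[float],
                   signal_time_ms: int, latency_ms: int) -> float:
    """Best ask in effect when an order sent at signal time reaches the exchange."""
    arrival = signal_time_ms + latency_ms
    # Index of the last quote update at or before the arrival time.
    i = bisect_right(quote_times_ms, arrival) - 1
    if i < 0:
        raise ValueError("no quote available before the order arrives")
    return best_asks[i]


# Made-up quote updates after a breakout signal at t = 0 ms.
quote_times_ms = [0, 5, 10, 20, 50]
best_asks = [100.00, 100.02, 100.05, 100.10, 100.30]
for latency in (0, 15, 25):
    print(f"latency {latency:>2} ms -> buy at {ask_at_arrival(quote_times_ms, best_asks, 0, latency):.2f}")
```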

General takeaways

When you are backtesting a short-term strategy (holding period of less than a few hours) or an altcoin strategy (e.g. with an average spread > 10 bps), it's good practice to use order book data with at least the best bid and ask prices (also called 'level 1' or 'BBO' data) for precision.
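To check whether an asset crosses a spread threshold like 10 bps, the average relative spread can be computed directly from BBO snapshots. A minimal sketch on made-up quotes; `avg_spread_bps` is a hypothetical helper using a simple mean, and a time-weighted average would be more precise if quote updates are irregular.

```python
from statistics import mean
from typing import Sequence, Tuple


def avg_spread_bps(bbo: Sequence[Tuple[float, float]]) -> float:
    """Mean bid-ask spread in basis points of the mid price, from (bid, ask) snapshots."""
    return mean((ask - bid) / ((ask + bid) / 2) * 1e4 for bid, ask in bbo)


# Made-up (bid, ask) snapshots of a thinly traded altcoin.
quotes = [(0.9990, 1.0010), (0.9985, 1.0015), (0.9980, 1.0020)]
print(f"average spread: {avg_spread_bps(quotes):.1f} bps")
```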

If your strategy is supposed to trade more capital than the quantities usually available at the best price levels, you should use 'level 2' order book data. The easiest form of this data to work with is 10- or 20-level snapshots. With them, you can calculate the exact slippage you would have incurred when trading your desired size. The market might seem to have sufficient liquidity, but at the times when you trade, during volatility or after significant events, the available liquidity can drop sharply.
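A minimal sketch of such a liquidity check with level 2 snapshots: for each snapshot, sum the ask size within a fixed distance of the mid price, then count how often that depth falls short of your order size. The helper name, the two snapshots, the 20 bps band and the order size of 3 are all made-up values; in practice you would run this over the snapshots at the moments your strategy actually trades.

```python
from typing import List, Tuple

Level = Tuple[float, float]  # (price, size)


def ask_depth_within_bps(best_bid: float, asks: List[Level], max_bps: float) -> float:
    """Total ask size priced within max_bps above the mid price."""
    mid = (best_bid + asks[0][0]) / 2
    limit = mid * (1 + max_bps / 1e4)
    return sum(size for price, size in asks if price <= limit)


# Made-up snapshots: (best bid, ask levels best first).
snapshots = {
    "calm": (99.95, [(100.0, 5.0), (100.05, 5.0), (100.2, 10.0)]),
    "volatile": (99.8, [(100.0, 1.0), (100.3, 2.0), (100.6, 5.0)]),
}
order_size = 3.0
short = [name for name, (bid, asks) in snapshots.items()
         if ask_depth_within_bps(bid, asks, max_bps=20) < order_size]
print(f"insufficient depth in {len(short)}/{len(snapshots)} snapshots: {short}")
```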

New posts are announced on X/twitter @jan_skoda or @crypto_lake_com, so follow us!